Immersive audio in Latin America doesn’t fail due to a lack of talent or technology.

It fails because validation processes, monitoring, and workflows were never designed for our context.

I recently launched an ongoing, targeted survey focused on active immersive audio practitioners across LATAM to map how technical decisions are validated under real-world conditions.

This article presents an initial diagnosis based on 32 specialized respondents. While the data collection remains open, the high recurrence of specific workflows, infrastructure constraints, and technical frictions already reveals robust and consistent patterns that define the regional landscape.

The data confirms that working with immersive audio in Latin America is not a creative problem, but a constant technical negotiation.

- 50% of professionals access a physical immersive monitoring room less than once a year.

This disconnection from the real acoustic space creates a fragile validation scenario, where the absence of direct and verifiable references turns binaural monitoring into the only available option. Immersive audio infrastructure in LATAM is a house of cards.

Its fragility is not an isolated phenomenon, but a structural condition that runs through the entire workflow and shapes every technical decision from the very beginning of the process.

- Working with generic HRTF models is equivalent to processing sound through someone else’s morphology.

The lack of individualized profiles and head-tracking systems makes spatial perception imprecise and generates cognitive fatigue that compromises technical judgment.

This analysis does not come from theory, but from direct observation of the infrastructure in Latin America. It is not an opinion, but a diagnosis of how the system actually operates.

When validation frameworks are fragile, technical decisions become indefensible, and what cannot be defended cannot scale. There is widespread confusion between experimentation and real technical validation.

The professional’s insecurity does not stem from a lack of knowledge, but is the logical response to tools that were never designed for their reality.

The Validation Limbo

In Latin America, most artists and professionals work under a structural limitation: they operate primarily from their own studios on projects intended for domes or immersive theaters abroad, so access to the final exhibition space is reduced to a few brief instances before the premiere.

This physical disconnection forces reliance on generic binaural rendering engines, which, lacking calibrated references, lose resolution and technical precision.

When there is no real acoustic environment, perception is replaced by a form of sensory substitution. Instead of trusting what we hear, we begin to trust what we see on the screen, relying on the visual information and data provided by the rendering engine.

In this scenario of acoustic myopia, mixing ceases to be an aesthetic decision and becomes an exercise in data management, prioritizing visual coherence over sonic intent.

This dynamic undermines trust in one’s own listening and makes professionals excessively dependent on what the software claims is happening.

To regain stability in the process, it is essential to establish constant reference points and rely on measurement tools that act as anchors to reality.

However, the most valuable resource is strengthening the technical dialogue between those who design the content and those who operate the physical space, because only through fluid communication about routing and the room’s real acoustic response is it possible to transform software uncertainty into solid professional decisions.

For software development and space management industries, this scenario represents a critical design opportunity.

The challenge is not only to improve rendering but also to create bridges that translate the complexity of the physical space to the workstation.

Integrating specific acoustic profiles for each room and automated communication protocols would allow companies to provide the technical stability that professionals are currently forced to improvise on their own to prevent the collapse of the spatial image.

The Fragility of the Ecosystem

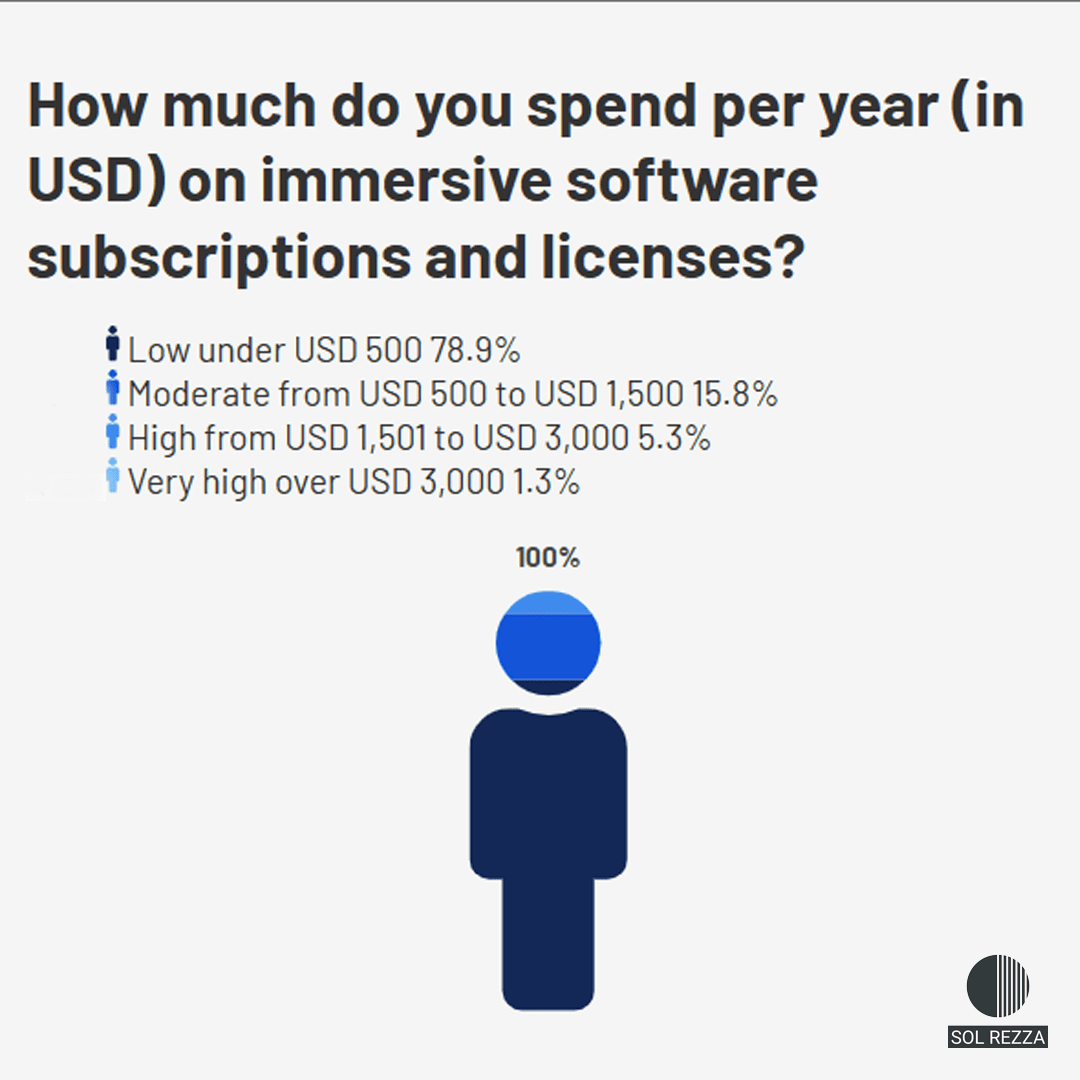

This pattern represents a statistical reality that reveals a marked segmentation within the community.

While one sector manages to sustain a stable workflow, the data shows a deep technical gap in which 42.8% of professionals describe their routing configuration as a persistent struggle, ranging from moderately to extremely difficult.

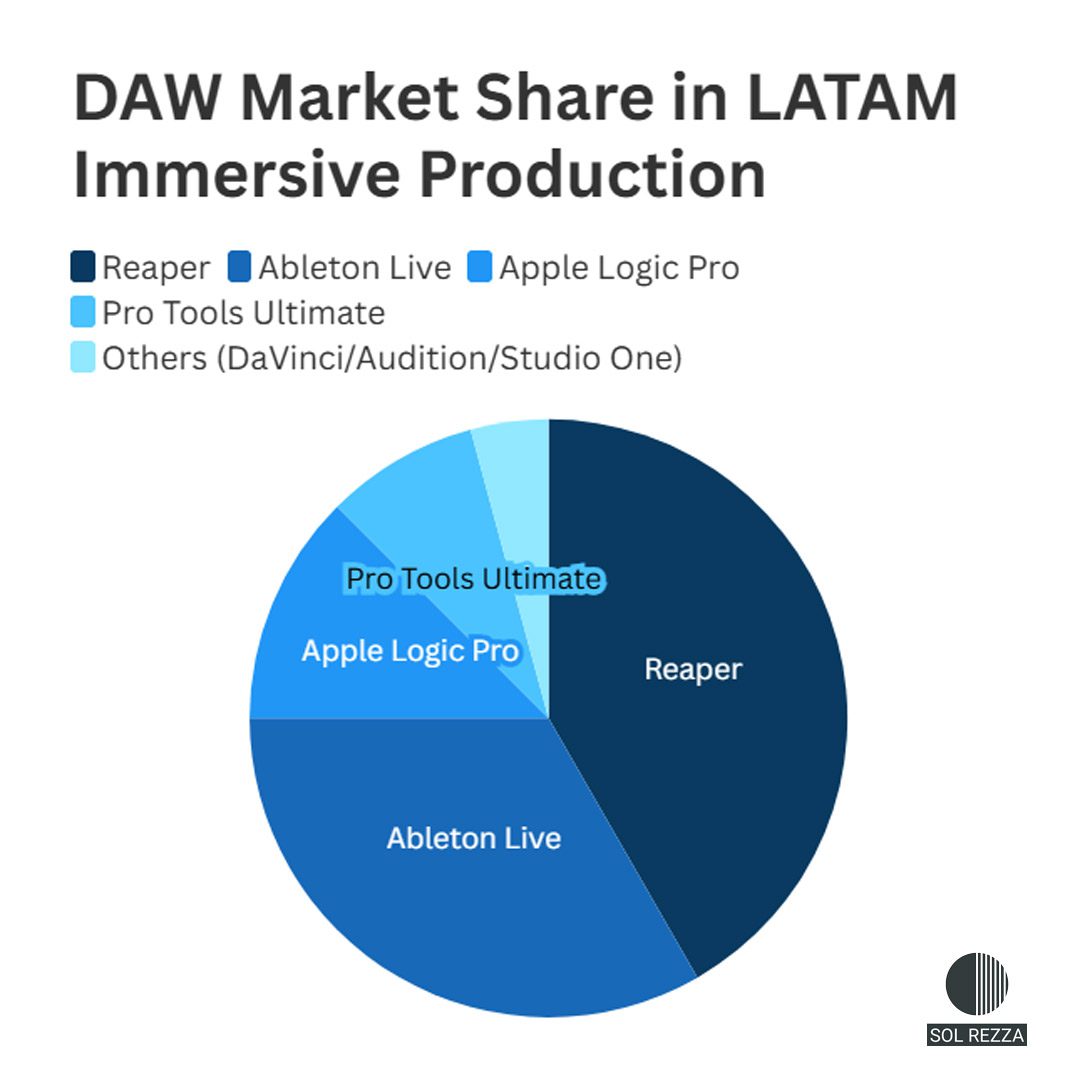

In this context of instability, 64.3% of respondents choose Reaper not simply as a creative preference, but as a fundamental strategy for technical survival.

This choice is largely driven by the lack of efficient native routing protocols in the dominant operating systems.

With half of the community using Windows as their main platform and the rest distributed across various versions of macOS and Linux, multichannel audio management depends on external bridges such as ASIO Link Pro, VB-Audio, QjackCtl, BlackHole, or Loopback.

Working under this scheme means operating without technical determinism. The audio chain becomes dependent on third-party drivers remaining updated and compatible with constant changes in rendering engines and operating system updates.
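One way to make this kind of breakage visible before a session starts is to compare the current routing against a known-good snapshot. The sketch below is illustrative only: the snapshot layout, the property names, and the function `diff_routing` are hypothetical, and how the snapshot is collected on each operating system is left out.

```python
def diff_routing(known_good, current):
    """List human-readable differences between two routing snapshots.

    Each snapshot is a hypothetical dict mapping a device name to its
    properties, e.g. {"driver": "1.2.0", "channels": 16}.
    """
    problems = []
    for name, expected in known_good.items():
        actual = current.get(name)
        if actual is None:
            problems.append(f"{name}: device missing")
            continue
        # Report every property that no longer matches the known-good value.
        for key, value in expected.items():
            if actual.get(key) != value:
                problems.append(
                    f"{name}: {key} changed from {value!r} to {actual.get(key)!r}"
                )
    return problems


# Hypothetical snapshots; the device name and values are placeholders.
known_good = {"virtual-bridge": {"driver": "1.2.0", "channels": 16}}
after_update = {"virtual-bridge": {"driver": "1.3.0", "channels": 2}}

for line in diff_routing(known_good, after_update):
    print(line)
```

A check like this does not restore determinism, but it turns a silent change in a third-party driver into an explicit message before the mix is affected.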

Systemic instability affects the professional’s workflow. Due to the fragility of the routing, technical experimentation is avoided in order not to risk session stability, as any technical failure interrupts the day’s work.

In Latin America, configuring an immersive working environment is, above all, a continuous exercise in risk management, where technological stability is the scarcest resource and where the success of a mix often depends on the validity of an intermediate software patch.

The HRTF Veil

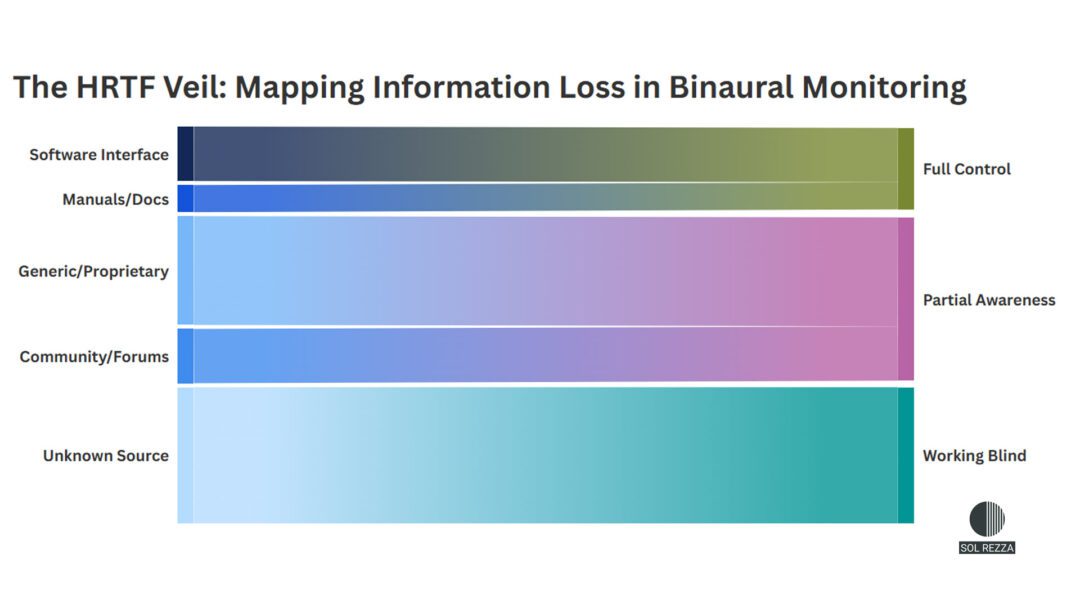

This psychoacoustic myopia manifests as a technical contradiction that the data reveals with clarity.

Although 57.1% of professionals use spatialization tools primarily for their ease of use, a large portion report critical difficulties in accurately perceiving elevation and distance.

The problem lies not only in the software, but in the imposition of an average statistical model onto individual physiology.

By operating without personalized HRTF profiles or head-tracking systems, the brain enters a state of auditory asynchrony. A dissonance emerges between what the visual interface indicates and what the cognitive system is actually able to decode.

This disconnection is deepened by a revealing finding from the survey: most users have no technical information about the HRTF profile they are using.

References such as the Neumann KU100 remain abstract concepts for professionals working with panning plugins, since they lack access to the original microphone or to the physical experience of that capture.

Given that access to tools for generating an individualized HRTF is almost nonexistent for the average user, and that there are no simple workflows to capture one’s own acoustic biometrics, professionals are forced to work with borrowed hearing.

Without knowing which filter is being applied to their own perception, control over the sound translation chain is lost. This lack of technical transparency is compounded by a systemic absence of standards, where no unified protocols or clear formats exist to define how to resolve localization inconsistencies in elevation and distance. While the horizontal plane enjoys a relative level of technical maturity, spatial decisions outside that axis lack a shared frame of reference.

Today, positions above and below the listener remain in a gray zone where each manufacturer applies proprietary and closed algorithms.

For the industry, this opacity represents a design opportunity. Opening these algorithms and enabling accessible tools for personalized capture would allow binaural rendering to evolve from a generic simulation into a professional-grade monitoring tool.

Only through transparency in these processes can the engineer regain authority over their own listening and ensure that what is designed in the virtual environment translates faithfully into the physical space.

The Technical Cost of Lacking Standards

The spatial uncertainty reported by professionals is the result of structural gaps that affect the predictability of current workflows.

Inconsistency between rendering engines represents the first technical conflict.

Although the ADM standard defines object positioning, there is no shared reference for timbral response. This lack of unified criteria causes the same object to sound different depending on the software being used and forces the engineer to compensate for system deviations instead of making creative decisions.
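To make the asymmetry concrete, here is a minimal sketch of the part that is well defined: turning an object's polar position into Cartesian coordinates. It assumes the convention usually associated with ADM polar positions (azimuth 0 straight ahead and positive toward the listener's left, elevation positive upward) and a right/front/up Cartesian frame; the function name is my own, not part of any library.

```python
import math


def adm_polar_to_cartesian(azimuth_deg, elevation_deg, distance=1.0):
    """Convert a polar object position to (x, y, z).

    Assumed convention: azimuth 0 is front, positive azimuth is to the left,
    positive elevation is up; x points right, y front, z up.
    """
    az = math.radians(azimuth_deg)
    el = math.radians(elevation_deg)
    x = -distance * math.sin(az) * math.cos(el)
    y = distance * math.cos(az) * math.cos(el)
    z = distance * math.sin(el)
    return (x, y, z)


# An object 30 degrees to the right of front, at ear height.
print(adm_polar_to_cartesian(-30.0, 0.0))
```

Every renderer can agree on this geometry. Nothing in the calculation says which filters, gains, or HRTF set are applied once the position is known, which is where the timbral differences between engines come from.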

The implementation barrier of the SOFA format deepens this lack of coherence within digital production environments.

Although this standard was created to universalize HRTF profiles, its integration into DAWs remains unintuitive and lacks accessible tools for capturing one’s own acoustic biometrics. Without simplified loading protocols, the industry remains tied to generic profiles that impose an external auditory morphology and undermine monitoring precision.
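As an illustration of what a simplified loading path has to do, the sketch below picks the measured direction closest to a requested one. It assumes positions laid out like the `SourcePosition` table of a SOFA file in spherical coordinates (azimuth and elevation in degrees, distance in metres); the grid here is a hypothetical, very sparse stand-in, and reading a real file is left out.

```python
import math


def angular_distance_deg(az1, el1, az2, el2):
    """Great-circle angle in degrees between two directions given in degrees."""
    a1, e1, a2, e2 = map(math.radians, (az1, el1, az2, el2))
    cos_angle = (math.sin(e1) * math.sin(e2)
                 + math.cos(e1) * math.cos(e2) * math.cos(a1 - a2))
    # Clamp to guard against rounding slightly outside [-1, 1].
    return math.degrees(math.acos(max(-1.0, min(1.0, cos_angle))))


def nearest_measurement(source_positions, azimuth_deg, elevation_deg):
    """Return (index, error in degrees) of the closest measured direction."""
    errors = [angular_distance_deg(az, el, azimuth_deg, elevation_deg)
              for az, el, _dist in source_positions]
    index = min(range(len(errors)), key=errors.__getitem__)
    return index, errors[index]


# Hypothetical grid: (azimuth, elevation, distance in metres).
EXAMPLE_GRID = [
    (0.0, 0.0, 1.2), (90.0, 0.0, 1.2), (180.0, 0.0, 1.2), (270.0, 0.0, 1.2),
    (0.0, 45.0, 1.2), (0.0, 90.0, 1.2),
]

index, error = nearest_measurement(EXAMPLE_GRID, 10.0, 40.0)
print(index, round(error, 1))
```

The returned index would select the matching impulse-response pair in the same file. The reported error is worth exposing to the user: on a sparse or generic grid, the direction actually rendered can sit several degrees away from the one shown on screen.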

The cone of confusion and artificial compensation emerge as the final consequence of this perceptual mismatch.

The data reveals a technical drift in which, due to the absence of standards for handling the Blauert bands, professionals resort to excessive reverberation to force a sense of spatiality that the system cannot natively guarantee. Only through protocols that prioritize human perception will it be possible to transform this instability into true technical sovereignty for the professional.

Toward a Diagnosis of Technical Sovereignty

This article does not seek to offer a conclusion, but rather a first approach to the state of regional infrastructure.

The data presented reflects an initial phase focused on the most visible structural failures, such as physical validation, ecosystem fragility, and psychoacoustic uncertainty. Critical dimensions still remain to be analyzed, including educational gaps, distribution limitations, and the economic cost of operating without shared standards.

The problem does not lie in a lack of professional competence, but in a profound asymmetry between software design and our operational reality.

What clearly emerges is that specialists make decisions within technical frameworks that prevent those decisions from being validated or transferred with precision.

As long as validation is treated as an individual responsibility instead of an infrastructure challenge, immersive audio will continue to depend on intuition and tolerance for an unstable working architecture.

Naming the problem with precision is the only way to demand solutions that truly respond to our context.

This research seeks to replace dependence on visual indicators with real confidence grounded in technical determinism and process transparency.